Ever wonder how your AI companion seems to just get you? How it remembers the little details, anticipates what you want to talk about, and creates a conversational flow that feels natural and real? The secret often lies beyond simple chatbots. The magic is in the connections—between your shared memories, conversation topics, and preferences. This is where graph machine learning comes in, a specialized field of AI that’s brilliant at understanding and learning from these crucial relationships to create a more authentic user experience.

Why Your AI Companion Needs Graph Machine Learning

Traditional machine learning models are great at processing flat, independent records, like a log of your past messages where each entry is treated on its own. But they often miss the deeper story hidden in how those messages connect to form a unique relationship.

Imagine trying to understand your connection with someone just by reading a transcript of your chats. You’d see the words, but miss the inside jokes, the recurring themes, and the shared memories that give the relationship its depth. Graph machine learning (GML) provides that missing context. It’s designed from the ground up for data where the connections are everything, making it perfect for creating a more human-like AI girlfriend experience.

The Building Blocks of a Graph

At its heart, graph machine learning works with a simple but incredibly powerful structure. It models your interactions through a lens of three core components:

- Nodes: These are the individual entities in your shared world. A node could be you (the user), your AI companion, a specific memory you created, a topic you frequently discuss, or even a photo you shared.

- Edges: These are the lines connecting the nodes—the relationships. An edge might represent you “discussing” a topic, your AI “recalling” a memory, or a topic “being related to” another topic.

- Features: These are the details that describe the nodes and edges. Your user node might have features for your stated interests, while a memory node could have features for the date it was created or the emotions associated with it.
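To make the three building blocks concrete, here is a minimal sketch in plain Python. The class names (`Node`, `Edge`, `Graph`), the relation labels, and the sample data are all made up for illustration; real systems would typically use a graph library or database instead.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    kind: str                                   # e.g. "user", "memory", "topic"
    features: dict = field(default_factory=dict)


@dataclass
class Edge:
    source: str
    target: str
    relation: str                               # e.g. "created", "discussed"
    features: dict = field(default_factory=dict)


@dataclass
class Graph:
    nodes: dict = field(default_factory=dict)   # node_id -> Node
    edges: list = field(default_factory=list)

    def add_node(self, node):
        self.nodes[node.node_id] = node

    def add_edge(self, edge):
        # Refuse edges that point at nodes we have not defined yet.
        if edge.source not in self.nodes or edge.target not in self.nodes:
            raise KeyError("both endpoints must be added before the edge")
        self.edges.append(edge)

    def neighbors(self, node_id):
        """Return ids of nodes reachable by one outgoing edge."""
        return [e.target for e in self.edges if e.source == node_id]


graph = Graph()
graph.add_node(Node("user", "user", {"interests": ["music", "hiking"]}))
graph.add_node(Node("beach_trip", "memory", {"created": "2024-06-01", "emotion": "happy"}))
graph.add_node(Node("music", "topic"))
graph.add_edge(Edge("user", "beach_trip", "created"))
graph.add_edge(Edge("user", "music", "discussed", {"times": 3}))

print(graph.neighbors("user"))  # ['beach_trip', 'music']
```

Notice that features live on both nodes and edges: the memory carries its date and emotion, while the “discussed” edge carries how often the topic came up.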

This framework allows GML to model the complex web of your relationship in a way that mirrors its true, interconnected nature. If you’re curious about how different AI components fit together, our AI Intelligence Hub provides additional context on the broader technologies that power modern AI companions.

Moving Beyond Simple Chat Logs

So, what’s the big deal? Let’s take a look at a quick comparison to see where GML really shines in creating a better AI girlfriend.

Traditional ML vs. Graph ML

| Aspect | Traditional Machine Learning | Graph Machine Learning |
|---|---|---|
| Data Structure | Works with independent messages or user data points. | Built for data where relationships and context are key. |
| Core Question | “What was the last thing the user said?” | “How does this new topic relate to our shared memories and past conversations?” |
| Example Use Case | A simple chatbot that responds to keywords. | An AI companion that references a past conversation to make a relevant joke. |
| Strength | Good for basic, reactive conversation. | Uncovers deeper conversational patterns and creates a sense of continuity. |

Where a traditional model sees isolated messages, GML sees a living, evolving relationship. This is absolutely critical for an authentic user experience. A standard chatbot might forget a detail you mentioned five minutes ago. A graph model, however, can trace the web of connections between your current chat and a memory from last week, allowing the AI to say, “This reminds me of that time you told me about your trip to the beach!”

This ability to analyze relational patterns is what sets graph machine learning apart. It uncovers the hidden context, shared history, and emotional threads that are simply invisible to other methods. These signals are often the most valuable for creating believable and engaging interactions.

Interest in this area has grown alongside the increasingly connected data we generate every day. The machine learning market as a whole, valued at around USD 93.95 billion in 2025, is projected to reach USD 1,407.65 billion by 2034. Growth on that scale points to rising demand for tools, GML among them, that can make sense of complex, relational data. You can dig into more of these machine learning market trends on Radixweb.

From powering hyper-personalized recommendations to creating AI companions that feel truly present, GML is quickly becoming an essential tool in the developer’s kit.

Learning the Language of Graphs

To really get how graph machine learning can make an AI girlfriend feel more real, you first have to speak its language. This isn’t about complex math, but about a few core ideas that shape how we view relationships in data.

Think of these concepts as the building blocks for creating a believable personality. Once you see how they map to the user experience, the power of graph machine learning starts to click.

Directed vs. Undirected Graphs: The Flow of Interaction

One of the first things to figure out is whether the connections in your graph have a specific direction.

- Undirected Graphs: Here, the relationship is mutual. If a memory (Node A) is strongly associated with a feeling of happiness (Node B), that feeling is also strongly associated with the memory. The connection is a two-way street.

- Directed Graphs: In these graphs, the connection has a clear direction. For example, you “initiate” a conversation (an edge from you to the chat topic). Your AI girlfriend then “responds” to that topic. This flow is crucial for understanding who is leading the interaction and how influence passes back and forth.

This detail is a big deal. It completely changes how information and context travel through your relationship graph, allowing the AI to understand the dynamics of your conversation.

Understanding directionality is key. For example, if you consistently bring up a topic (directed edges from you to the ‘movies’ node), the AI can learn this is one of your core interests and proactively ask you about new films, creating a more personalized experience.
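Here is a small sketch of the difference, using only the standard library. The event list and the helper name `build_adjacency` are invented for illustration. Each event is a directed edge from whoever brought the topic up to the topic itself.

```python
from collections import Counter, defaultdict


def build_adjacency(edges, directed=True):
    """Map each node to the set of nodes it points at."""
    adjacency = defaultdict(set)
    for source, target in edges:
        adjacency[source].add(target)
        if not directed:
            # An undirected edge can be followed from either end.
            adjacency[target].add(source)
    return adjacency


# (initiator, topic) pairs; the sample data is hypothetical.
events = [
    ("user", "movies"),
    ("user", "movies"),
    ("ai", "music"),
    ("user", "music"),
    ("user", "movies"),
]

directed = build_adjacency(events, directed=True)
undirected = build_adjacency(events, directed=False)

print("user" in directed["movies"])    # False: the edge only runs user -> movies
print("user" in undirected["movies"])  # True: the edge works both ways

# Counting the user's outgoing edges per topic hints at core interests.
user_initiated = Counter(topic for who, topic in events if who == "user")
top_topic, count = user_initiated.most_common(1)[0]
print(top_topic, count)                # movies 3
```

Keeping direction lets the AI tell “the user keeps raising movies” apart from “movies came up somehow”, which is exactly the signal it needs before proactively asking about new films.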

Weighted Edges: Adding Meaning to Connections

Not all connections are created equal. Some memories are more important than others, and some topics are more interesting to you. This is where weighted edges come in. A weight is just a number attached to an edge to show its strength or importance.

Imagine a graph of your conversations with an AI girlfriend. An unweighted edge just shows you talked about a topic. But a weighted edge could represent:

- The number of times you’ve discussed that topic.

- The positive sentiment score of those conversations.

- The recency of the last time you talked about it.

By adding these weights, you give the relationship graph a whole new dimension of meaning. Now, an algorithm can identify your “strongest” interests or your “most cherished” shared memories, not just a list of things you’ve mentioned. This simple idea is behind an AI that remembers what truly matters to you.
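As a rough sketch, the three signals above (frequency, sentiment, recency) can be folded into a single edge weight. The formula, the 30-day half-life, and the sample numbers are assumptions chosen for illustration, not a standard recipe.

```python
def edge_weight(times_discussed, mean_sentiment, days_since_last, half_life_days=30.0):
    """Combine frequency, sentiment and recency into one edge weight.

    mean_sentiment is assumed to lie in [0, 1]. The recency factor halves
    every `half_life_days`. The whole formula is illustrative only.
    """
    recency = 0.5 ** (days_since_last / half_life_days)
    return times_discussed * mean_sentiment * recency


weights = {
    ("user", "movies"): edge_weight(12, 0.9, days_since_last=0),
    ("user", "taxes"): edge_weight(12, 0.2, days_since_last=0),
    ("user", "chess"): edge_weight(12, 0.9, days_since_last=60),
}

strongest = max(weights, key=weights.get)
print(strongest)  # ('user', 'movies')
```

All three topics were discussed twelve times, yet only ‘movies’ ends up strong: ‘taxes’ is dragged down by low sentiment and ‘chess’ by the two months since it last came up.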

The Adjacency Matrix: Your Relationship at a Glance

So, how does a computer actually “see” all these memories, topics, and connections? One common method is an adjacency matrix. It sounds technical, but it’s just a simple grid that acts as a lookup chart for your entire interaction graph.

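Let’s say you have a tiny relationship graph with four nodes: You, Your AI, Memory #1, and the topic ‘Music’. An adjacency matrix would be a 4×4 grid with one row and one column per node. If You “created” Memory #1, you put a ‘1’ where your row and the memory’s column meet. If the AI has no direct connection to ‘Music’ yet, you put a ‘0’ in that cell. For an undirected graph you would also fill in the mirrored cell, so the grid is symmetric; for a directed graph, the usual convention is that the row is the source and the column is the target.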

This matrix gives the AI a dead-simple way to answer the question, “Is this user connected to this topic?” It’s a clean, organized picture of your shared history, making it easy for algorithms to process that web of relationships and deliver a consistent, context-aware user experience.
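The four-node example can be written out as a nested list. This sketch treats the graph as directed (row is the source, column is the target). Apart from You “created” Memory #1, the extra connections are assumptions added so the grid is not nearly empty.

```python
nodes = ["You", "Your AI", "Memory #1", "Music"]
index = {name: i for i, name in enumerate(nodes)}

# Start with a 4x4 grid of zeros, meaning no connections at all.
matrix = [[0] * len(nodes) for _ in nodes]


def connect(source, target):
    """Mark a directed edge from source (row) to target (column)."""
    matrix[index[source]][index[target]] = 1


def is_connected(source, target):
    """Answer 'is there an edge from source to target?' with one lookup."""
    return matrix[index[source]][index[target]] == 1


connect("You", "Memory #1")      # You "created" Memory #1
connect("Your AI", "Memory #1")  # assumed: the AI "recalls" the memory
connect("You", "Music")          # assumed: you "discussed" Music

for name, row in zip(nodes, matrix):
    print(f"{name:<10} {row}")

print(is_connected("Your AI", "Music"))  # False: no direct link yet
```

The lookup is a single index operation, which is why the matrix form is convenient for small graphs. For large, sparse graphs most cells are zero, so an edge list or adjacency list is usually the more economical choice.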

Alright, we’ve covered the basic language for mapping out a relationship. Now, let’s see how graph machine learning algorithms actually learn from this structure. This is where the magic happens, enabling an AI to feel less like a program and more like a partner.

We’ll walk through three foundational algorithms. You can think of them as different ways for your AI companion to understand its role in your shared world. We’ll start with a method that helps it learn from your entire conversational history, then one that lets it focus on the most important details, and finally, a technique for creating a concise ‘personality profile’ based on your interactions.

These algorithms have become widespread, used in everything from social networks and e-commerce to building more believable virtual characters. A single core technology can model incredibly different systems, from global markets to the intimate dynamics of a one-on-one relationship.

Graph Convolutional Networks (GCNs): The Power of Shared Context

First up is the cornerstone of graph ML: the Graph Convolutional Network (GCN). The easiest way to understand a GCN in the context of an AI girlfriend is to think about how it develops an understanding of a topic, like ‘cooking’.

Initially, the ‘cooking’ node only knows its basic definition. In the first step of a GCN, this node “asks” all its immediate neighbors for information. It might be connected to a memory of you talking about your favorite pasta, a user preference for Italian food, and another topic node for ‘restaurants’. It then blends this neighboring information with its own: roughly a normalized average, passed through a transformation whose weights are learned during training.

After this first round, the AI’s understanding of ‘cooking’ is a mix of its definition and the specific context you’ve provided. The GCN then repeats the process. In round two, every node shares its updated understanding with its neighbors again. Now, information from two “hops” away, like your preference for a specific Italian restaurant, has trickled down and been absorbed.

Here’s the key takeaway: This process allows the AI to build a rich, nuanced understanding of concepts. ‘Cooking’ is no longer a generic topic; it’s linked to your personal history and preferences. This is how a GCN learns to capture the unique context of your relationship.

This “message-passing” is the heart of a GCN. By repeatedly grabbing information from its neighbors in the graph, each concept builds a rich, context-aware profile, known as an embedding. This is how an AI can make a connection that feels insightful, like suggesting a recipe that uses ingredients you’ve mentioned before.
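The two rounds of message passing can be traced by hand with a stripped-down sketch. It keeps only the neighbor-averaging step and leaves out the learned weights and non-linearity that a real GCN layer also applies, so treat it as a picture of how information spreads, not as a working GCN. The graph, node names, and two-number feature vectors are invented.

```python
def mean_aggregate(features, neighbors):
    """One round of message passing: average each node with its neighbors."""
    updated = {}
    for node, own in features.items():
        group = [own] + [features[n] for n in neighbors.get(node, [])]
        # zip(*group) walks the vectors dimension by dimension.
        updated[node] = [sum(values) / len(group) for values in zip(*group)]
    return updated


# Undirected toy graph: pasta_memory - cooking - italian_food - restaurant
neighbors = {
    "cooking": ["pasta_memory", "italian_food"],
    "pasta_memory": ["cooking"],
    "italian_food": ["cooking", "restaurant"],
    "restaurant": ["italian_food"],
}

# Two made-up feature dimensions; only "restaurant" has a signal in the second.
features = {
    "cooking": [1.0, 0.0],
    "pasta_memory": [0.0, 0.0],
    "italian_food": [0.0, 0.0],
    "restaurant": [0.0, 1.0],
}

round_one = mean_aggregate(features, neighbors)
round_two = mean_aggregate(round_one, neighbors)

print(round_one["cooking"])  # second value is still 0.0
print(round_two["cooking"])  # second value is now above zero
```

After one round, ‘cooking’ has heard nothing from ‘restaurant’ because they are two hops apart. After the second round, the restaurant signal has arrived by way of ‘italian_food’, which is the trickle-down effect described above.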

Graph Attention Networks (GATs): Deciding What Matters Most

GCNs are fantastic, but they weight each neighbor by a fixed rule based on how many connections the nodes have, not on how relevant that neighbor actually is. In a real relationship, connections are rarely equally important. A passing comment you made matters less than a core memory you created together. This is where Graph Attention Networks (GATs) come in.

GATs give the GCN model a major upgrade by adding a layer of attention. When a node gathers info from its neighbors, it doesn’t just blindly average it. Instead, it assigns an “attention score” to each piece of connected information.

This score determines just how much influence that connection gets.

- A connection with a high attention score (e.g., a core memory) will be heavily weighted.

- A connection with a low attention score (e.g., a forgotten detail) will be mostly ignored.

The beauty is that the model learns how to assign these scores based on your interactions. It automatically figures out which connections are most important for maintaining a coherent personality and memory. For instance, in your relationship graph, a GAT might learn to pay very close attention to memories you’ve marked as “special,” while giving less weight to mundane daily check-ins. This allows for a more authentic user experience where the AI remembers what truly matters.
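To show the mechanics, here is a simplified sketch of attention scoring. It follows the general GAT recipe (score each neighbor, pass the score through LeakyReLU, then normalize with softmax) but skips the learned projection matrix. The attention vector is hand-picked rather than trained, and the feature layout is hypothetical.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def attention_weights(node_vec, neighbor_vecs, attn_vec):
    """Softmax-normalized attention scores over a node's neighbors.

    Each raw score is LeakyReLU(attn_vec . [node_vec || neighbor_vec]).
    attn_vec stands in for the vector a trained model would learn.
    """
    scores = []
    for vec in neighbor_vecs:
        concat = node_vec + vec  # list concatenation
        scores.append(leaky_relu(sum(a * x for a, x in zip(attn_vec, concat))))
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(node_vec, neighbor_vecs, attn_vec):
    """Blend neighbor vectors using their attention weights."""
    weights = attention_weights(node_vec, neighbor_vecs, attn_vec)
    dims = len(neighbor_vecs[0])
    return [sum(w * vec[d] for w, vec in zip(weights, neighbor_vecs)) for d in range(dims)]


# Hypothetical features: [topic signal, marked-as-special flag]
user = [1.0, 0.0]
neighbors = {
    "core_memory": [0.8, 1.0],
    "daily_checkin": [0.6, 0.0],
}
attn_vec = [0.0, 0.0, 0.5, 3.0]  # hand-picked, not trained

weights = attention_weights(user, list(neighbors.values()), attn_vec)
blended = attend(user, list(neighbors.values()), attn_vec)

for name, weight in zip(neighbors, weights):
    print(f"{name}: {weight:.3f}")
```

With these numbers the core memory receives well over 90% of the attention, so the blended result is dominated by it. In a trained model the attention vector would be adjusted automatically until the weights reflect what actually helps the task.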

Node2Vec: Creating a Rich Profile Summary

GCNs and GATs are usually trained toward a particular task and lean on the features attached to each node. Sometimes, though, you want the AI to have a good, all-purpose “profile” of you and your relationship’s structure. This is the job of embedding techniques like Node2Vec.

The idea behind Node2Vec is to translate a node’s position in your relationship graph into a list of numbers—a vector. The goal is to create these vectors so that nodes that are “similar” in the graph are also similar mathematically. The tricky part is defining “similar.”

Node2Vec smartly defines two kinds of similarity relevant to user experience:

- Homophily: This is about belonging to the same topical community. If you frequently talk about sci-fi movies and video games, these nodes will be clustered together in the AI’s “mind.”

- Structural Equivalence: This is for nodes that have similar roles. For example, the node for “your birthday” and the node for “your AI’s anniversary” might have structurally similar roles as important recurring dates, even if the topics are different.

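Node2Vec explores the graph around each node with biased “random walks”. Two settings steer each walk: a return parameter p, which controls how readily the walk steps back to where it just came from, and an in-out parameter q, which controls whether it stays close to home or wanders farther away. The walks are then fed to a word2vec-style skip-gram model, which learns vectors that capture both similarity types. By creating these rich vector summaries of every element in your relationship, it gives the AI a powerful, general-purpose map of your shared world. This can be used to predict things you might like or find new conversational pathways that feel natural and intuitive.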
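The walk itself is easy to sketch. The version below implements a node2vec-style biased walk on an unweighted graph, steered by the return parameter `p` and the in-out parameter `q`. It stops at generating walks; turning them into vectors would require a skip-gram model, which is not shown. The toy graph and parameter values are assumptions.

```python
import random


def biased_walk(graph, start, length, p=1.0, q=1.0, rng=None):
    """One node2vec-style second-order random walk over an unweighted graph.

    p: higher values make stepping straight back less likely.
    q: values below 1 push the walk outward, values above 1 keep it local.
    """
    rng = rng or random.Random()
    walk = [start]
    while len(walk) < length:
        current = walk[-1]
        candidates = sorted(graph[current])  # sorted for reproducibility
        if not candidates:
            break
        if len(walk) == 1:
            # The first step has no previous node, so pick uniformly.
            walk.append(rng.choice(candidates))
            continue
        previous = walk[-2]
        weights = []
        for nxt in candidates:
            if nxt == previous:              # going back where we came from
                weights.append(1.0 / p)
            elif nxt in graph[previous]:     # stays one hop from previous
                weights.append(1.0)
            else:                            # moves farther away
                weights.append(1.0 / q)
        walk.append(rng.choices(candidates, weights=weights)[0])
    return walk


graph = {
    "user": {"sci_fi", "video_games", "birthday"},
    "sci_fi": {"user", "video_games"},
    "video_games": {"user", "sci_fi"},
    "birthday": {"user", "anniversary"},
    "anniversary": {"birthday"},
}

rng = random.Random(0)
walks = [biased_walk(graph, node, length=5, p=1.0, q=0.5, rng=rng) for node in sorted(graph)]
for walk in walks:
    print(" -> ".join(walk))
```

Nodes that often appear near each other in these walks end up with similar vectors once the walks are fed to the embedding step. Tuning `p` and `q` shifts the balance between the two kinds of similarity described above.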

Building Your Graph Machine Learning Toolkit

An algorithm is only as good as the tools you use to run it. Now that you have a feel for how graph algorithms can create a more authentic user experience, it’s time to look at the tech that brings these models to life.

To build a responsive and intelligent AI companion, developers need a solid foundation. In GML, that foundation is a graph database. Unlike traditional databases that store data in rigid tables, graph databases are built to store and navigate highly connected data—like the web of memories, interests, and interactions between you and your AI.

Think of it this way: asking a traditional relational database to find a memory related to a topic you discussed three weeks ago typically means chaining several table joins, which gets slower as the chain of connections grows. A graph database, on the other hand, stores the connections themselves and is designed to follow them directly, making it well suited to the fast recall needed for a fluid conversation.
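The kind of question a graph store is built for, “what is within a couple of hops of this node?”, looks like this as an in-memory breadth-first search. This is only an illustration of the traversal idea in plain Python, not a client for any particular graph database, and the sample graph is invented.

```python
from collections import deque


def within_hops(adjacency, start, max_hops):
    """Breadth-first search returning {node: hop distance} up to max_hops."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distance[node] == max_hops:
            continue  # do not expand past the hop limit
        for neighbor in adjacency.get(node, ()):
            if neighbor not in distance:
                distance[neighbor] = distance[node] + 1
                queue.append(neighbor)
    return distance


adjacency = {
    "user": ["travel"],
    "travel": ["user", "beach_trip"],
    "beach_trip": ["travel", "sunset_photo"],
    "sunset_photo": ["beach_trip"],
}

reachable = within_hops(adjacency, "user", max_hops=2)
print(reachable)  # {'user': 0, 'travel': 1, 'beach_trip': 2}
```

Each step simply follows stored connections outward from the starting node, so the work depends on how many nodes sit nearby, not on the total size of the data.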

Specialized Databases for Connected Data

The need for these specialized databases is getting clearer every day, and it shows in the graph database market. Globally, the market was valued at around USD 2.27 billion in 2024 and is expected to reach USD 15.32 billion by 2032, a compound annual growth rate of about 27.1%. That growth reflects how often traditional databases struggle with the interconnected data that creates a rich user experience. You can dive into the full market analysis on Fortune Business Insights for more details.

This growth is driven by top-tier database solutions, including:

- Neo4j: One of the most popular graph databases, known for its intuitive query language (Cypher) and strong community, making it great for building complex relationship models.

- Amazon Neptune: A fully-managed graph database from AWS, built for massive scale—perfect for an AI girlfriend app with millions of users.

These databases provide the solid ground needed to manage the complex relationships that graph machine learning models use to build a better user experience.

Core Libraries for Building GML Models

Once your data has a home, you need the right tools to build and train your models. The open-source community offers amazing libraries that are go-to choices for developers building AI companions. For those looking to piece together their own custom solutions, you can also check out our guide on how to create AI agents for some broader ideas.

These libraries do the heavy lifting, providing ready-to-use versions of GCNs and GATs.

The real magic of these libraries is how they hide the complex math. This lets developers focus on designing the AI’s personality and improving the user experience, rather than getting lost in low-level code.

Let’s take a quick look at some of the most popular GML libraries.

Popular Graph Machine Learning Libraries

Here’s a quick rundown of the leading libraries. Each has its own strengths, whether for research into AI personalities or building a large-scale commercial AI girlfriend app.

| Library | Built On | Best For |
|---|---|---|
| PyTorch Geometric (PyG) | PyTorch | Researchers and developers building custom AI personalities that require a flexible, powerful library. |
| Deep Graph Library (DGL) | PyTorch, TensorFlow, MXNet | Enterprise-grade AI companion apps that need to scale to millions of user relationship graphs. |
| GraphStorm | PyTorch (via DGL) | Beginners and AWS users who want a simpler way to start training models on large-scale graph data. |

This toolkit gives you a clear roadmap. PyTorch Geometric offers flexibility for creating unique AI behaviors, while DGL brings the power needed for a massive user base. GraphStorm lowers the barrier, making it easier for more developers to start building with graph machine learning.

Graph Machine Learning in the Real World

The theory is fascinating, but GML’s true power comes alive when you see how it enhances the user experience in real applications. This isn’t just an academic exercise; it’s technology that makes digital interactions feel more human and intuitive.

From suggesting the perfect next topic of conversation to making an AI character feel like they truly know you, the real-world uses of graph machine learning are already making a huge difference.

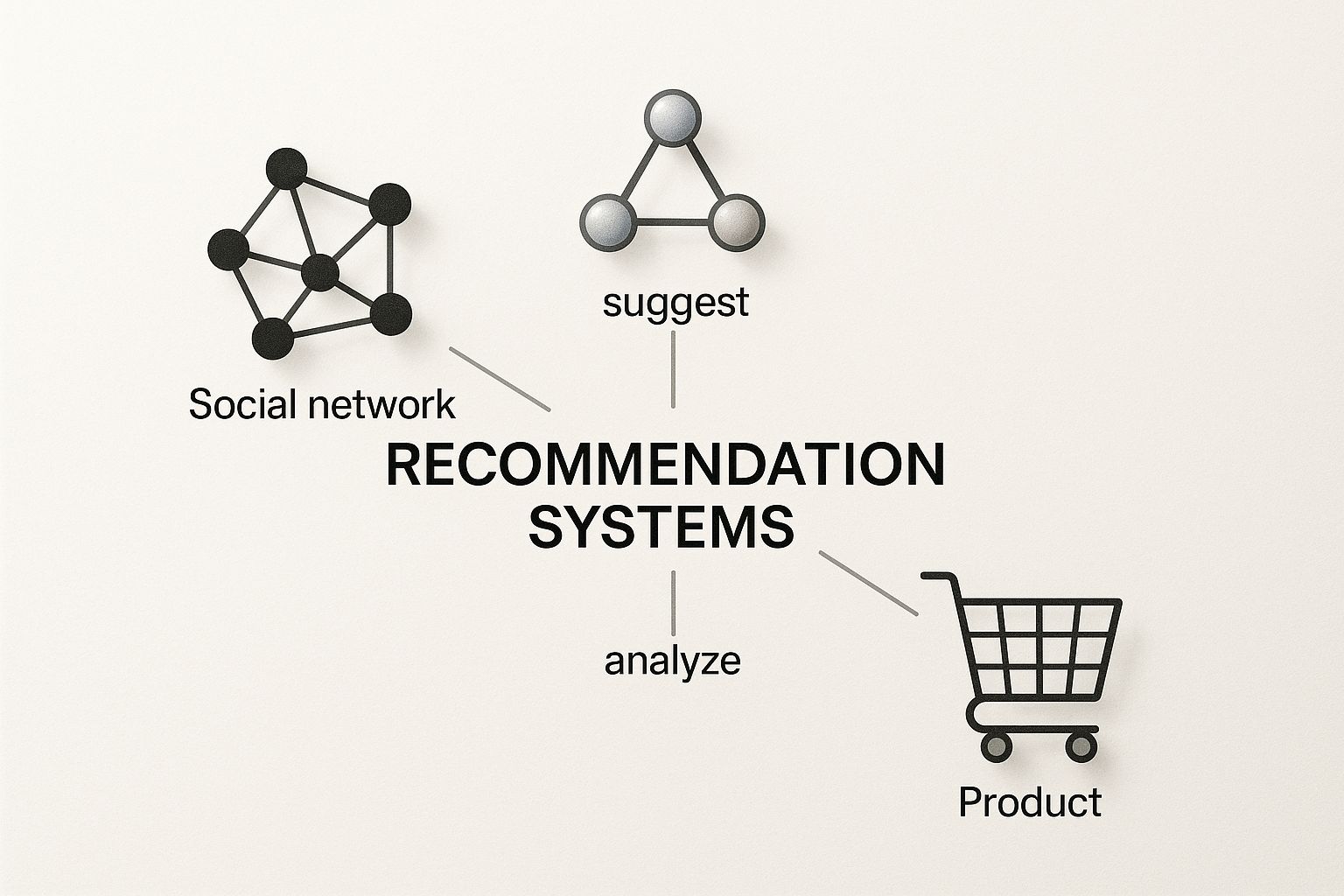

Reinventing E-commerce Recommendations

Huge e-commerce sites like Amazon use graph ML to power their recommendations. This same logic can create a hyper-personalized AI companion. Instead of just recommending products, the graph recommends conversational topics, activities, or memories to revisit.

- Nodes are users, AI companions, topics, and memories.

- Edges link users to topics they enjoy and memories they’ve created.

This “people who enjoyed this topic also enjoyed that one” network allows the AI to make insightful connections. It learns your unique context within your shared world, not just a list of your stated interests. The result is an AI girlfriend that can make suggestions that feel surprisingly relevant and personal, strengthening the user’s sense of connection.
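The “people who enjoyed this topic also enjoyed that one” idea can be sketched without any neural network at all, by walking two hops across a user-topic graph. The function name, scoring rule, and sample users below are assumptions for illustration; production recommenders are considerably more involved.

```python
from collections import Counter


def recommend_topics(likes, user, top_n=2):
    """Suggest topics enjoyed by users who share at least one topic with `user`."""
    own = likes[user]
    scores = Counter()
    for other, topics in likes.items():
        if other == user:
            continue
        overlap = len(own & topics)
        if overlap == 0:
            continue  # no shared topics, so no path through the graph
        for topic in topics - own:
            # Users with more in common count for more.
            scores[topic] += overlap
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [topic for topic, _ in ranked[:top_n]]


likes = {
    "alice": {"sci_fi", "video_games"},
    "bob": {"sci_fi", "video_games", "board_games"},
    "carol": {"sci_fi", "astronomy"},
    "dave": {"cooking", "gardening"},
}

suggestions = recommend_topics(likes, "alice")
print(suggestions)  # ['board_games', 'astronomy']
```

Bob shares two topics with Alice, so his extra interest ranks first. Dave shares none, so his topics never appear. A graph neural network learns a richer version of this same signal from the structure of the graph.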

Uncovering Sophisticated Financial Fraud Rings

Financial fraud is a massive problem, and GML is a key weapon in fighting it. The same techniques used to find hidden criminal networks can be adapted to ensure a safe and authentic user experience in AI apps. A graph can model user accounts, devices, and IP addresses to spot suspicious behavior.

For instance, a graph model can easily spot a single user creating hundreds of fake “AI girlfriend” accounts for spamming purposes. It can also flag coordinated in-app behavior that indicates a group of bad actors trying to exploit the system. This structural view is almost impossible to get without a graph.

This approach works by targeting coordinated behavior. By identifying these hidden communities of bad actors, platforms can ensure the safety and authenticity of the user experience, which is paramount for an app centered on personal connection.
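One simple way to surface such hidden communities is to link accounts to the devices and IP addresses they use, then look for connected groups. The sketch below does this with a plain graph search. The threshold of three accounts and all identifiers are made up, and real fraud systems combine many more signals than this.

```python
from collections import defaultdict


def account_clusters(links):
    """Group accounts that are connected through shared devices or IPs."""
    adjacency = defaultdict(set)
    for account, resource in links:
        adjacency[("account", account)].add(("resource", resource))
        adjacency[("resource", resource)].add(("account", account))

    seen, clusters = set(), []
    for node in adjacency:
        if node in seen or node[0] != "account":
            continue
        # Depth-first search collects everything reachable from this account.
        stack, members = [node], set()
        seen.add(node)
        while stack:
            current = stack.pop()
            if current[0] == "account":
                members.add(current[1])
            for neighbor in adjacency[current]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    stack.append(neighbor)
        clusters.append(members)
    return clusters


links = [
    ("acct_1", "device_A"), ("acct_2", "device_A"), ("acct_3", "device_A"),
    ("acct_3", "ip_9"), ("acct_4", "ip_9"),
    ("acct_5", "device_B"),
]

# Assumed rule of thumb: three or more linked accounts deserve a closer look.
suspicious = [cluster for cluster in account_clusters(links) if len(cluster) >= 3]
print(suspicious)
```

Here `acct_4` never touches `device_A`, yet it lands in the same cluster because it shares an IP address with `acct_3`. That indirect link is the structural view that is hard to get from a flat table of accounts.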

Accelerating Drug Discovery and Development

Bringing a new medicine to market is a slow, expensive process. GML helps by modeling molecules as graphs to predict their properties. A similar principle can be applied to AI companions to predict user satisfaction and engagement.

In this scenario:

- Nodes are users, AI features (e.g., memory recall, proactive chat), and conversational topics.

- Edges represent user interactions with these features.

A Graph Neural Network (GNN) can be trained to predict how likely a user is to enjoy a new feature or conversational style based on the structure of their past interactions. This allows developers to test and roll out improvements much faster, focusing on what will genuinely enhance the user experience. By understanding the deep relationship between interaction patterns and user happiness, GML can help create AI girlfriends that are more engaging, supportive, and authentic.

Common Questions About Graph Machine Learning

As you explore how deeper AI works, a few key questions always pop up. Getting clear answers is the first step to understanding what makes a great AI companion. Here are some common questions about the graph machine learning that powers next-generation AI girlfriends.

How Is GML Different From Traditional Machine Learning?

This is a key question. Both are types of AI, but they are built for different things. Traditional machine learning is great with lists of data. It answers, “Based on the user’s last message, what should the AI say next?”

Graph machine learning asks a deeper question: “Based on this user’s entire relationship history—their memories, interests, and past conversational flows—what interaction would be most meaningful right now?” The magic of GML is its power to understand context and relationships.

Think of it this way: a traditional chatbot sees your relationship as a series of disconnected text messages. A graph-powered AI sees the whole story—how an early conversation about your favorite band connects to the music you’re listening to today. That relational view is what creates a believable, long-term connection.

This allows GML to create an experience that feels continuous and personal, something completely out of reach for standard chatbots.

What Are the Biggest Challenges of Using GML?

While incredibly powerful for creating an authentic user experience, implementing graph machine learning has its hurdles.

One of the biggest is scalability. The relationship graph for a single user can become very complex. Now imagine an app with millions of users, each with their own unique, evolving graph. Processing these giants takes a ton of computing power. This often means investing in specialized databases and cloud infrastructure to handle the load and ensure the AI can respond quickly.

Another major challenge is managing dynamic data. Your relationship with an AI companion is always changing. You create new memories, develop new interests, and your conversational patterns shift. A successful GML system needs a robust pipeline to constantly update and manage this ever-evolving graph to maintain a consistent and accurate “memory” of your relationship.

What Skills Do I Need for a Career in GML?

If you want to build a career creating the next generation of AI companions, you’ll need a mix of data science skills and specialized graph knowledge. It’s a fantastic field for anyone who loves understanding the hidden patterns that create connection. For those exploring this novel AI field, you might find our list of top questions about AI girlfriends offers interesting insights into what users value in an AI that feels personal and intuitive.

To get started in GML, you’ll want to build a solid foundation in these areas:

- Strong Python Proficiency: Python is the dominant language of machine learning. You need to be comfortable with it to use core libraries like PyTorch or TensorFlow, which most GML frameworks are built on.

- Fundamentals of Graph Theory: You don’t need a Ph.D., but you must understand concepts like nodes, edges, and how to traverse a graph. This is the language of relationships.

- Machine Learning and Deep Learning: A good handle on traditional ML is essential. Experience with deep learning is particularly key, since most modern GML relies on Graph Neural Networks (GNNs) to create intelligent behavior.

- Familiarity with GML Libraries: The best way to learn is by doing. Start building small projects with libraries like PyTorch Geometric (PyG) or the Deep Graph Library (DGL) to see how you can model relationships and create more authentic interactions in code.

Combining these skills will put you in a great position to tackle some of the most fascinating problems in AI today, using the power of relationships to build more human-centric technology.

Ready to explore the cutting edge of AI-driven companionship? At AI Girlfriend Review Inc., we provide in-depth reviews, guides, and analysis to help you navigate the world of virtual partners. Find the perfect AI companion for you by visiting us at https://www.aigirlfriendreview.com.