At a high level, building an AI agent is pretty straightforward. You give it a goal, a set of tools (like access to an API or web search), and an LLM to act as its brain. The agent then gets to work in a loop: it looks at the current situation, thinks about what to do next, and uses a tool to take action. It repeats this cycle until the job is done.

Why AI Agents Are Reshaping Development

Before we jump into the code, it’s worth taking a moment to appreciate why building AI agents is such a huge leap forward. We’re not talking about simple automation scripts anymore. Modern AI agents are dynamic systems that can reason, plan, and act on their own to tackle complex, multi-step problems.

This shift has been a long time coming. The journey started decades ago, with the groundwork being laid as early as 1950 when Alan Turing proposed his famous test for machine intelligence. The field really took off at the 1956 Dartmouth Conference, and by 1988, we saw breakthroughs in reinforcement learning that taught agents to learn from rewards and punishments—a concept that’s still fundamental today. You can get a good overview of this foundational history from a great piece on the evolution of AI agents.

From Rigid Rules to Flexible Reasoning

Older AI systems were stuck in their ways, constrained by rigid, rule-based logic. They worked fine in predictable settings but completely fell apart when things got messy or required a creative solution. If a scenario didn’t perfectly fit a predefined rule, the system would just fail.

This made them pretty useless for most real-world challenges. Think of an old-school customer service chatbot that could only follow a strict script. The second you asked an unexpected question, it was game over. For an AI girlfriend, this would mean a conversation that feels repetitive and robotic, completely breaking the illusion of a genuine connection.

The core difference is this: a traditional script follows a path you define, while an AI agent finds its own path to a goal you set. This autonomy is what makes them so powerful for developers.

The Impact of LLM-Powered Agents

The arrival of powerful Large Language Models (LLMs) changed everything. By using an LLM as a reasoning engine, we can now build agents that have a kind of common-sense understanding.

These new agents can:

- Deconstruct Vague Goals: They can take a fuzzy objective like “research the best AI companion apps” and break it down into a series of concrete, actionable steps.

- Adapt to New Information: An agent can read the results of a web search or an API call and adjust its strategy on the fly.

- Select the Right Tools: It can intelligently decide whether it needs a web browser, a calculator, or a code interpreter to handle the immediate task.

This incredible flexibility is why frameworks like LangChain have exploded in popularity. They provide the essential scaffolding that connects the LLM brain to its tools and memory. This means you can focus on your agent’s unique logic instead of getting bogged down building the complex mechanics from scratch, which massively speeds up the development process.

The Anatomy of a Modern AI Agent

Before you can build a truly useful AI agent, you’ve got to understand what’s under the hood. Think of it like building a custom PC—every component has a specific job, and they all need to sync up perfectly. A modern agent isn’t just a chatbot. It’s a complex system designed to think, use tools, and remember past conversations to get things done.

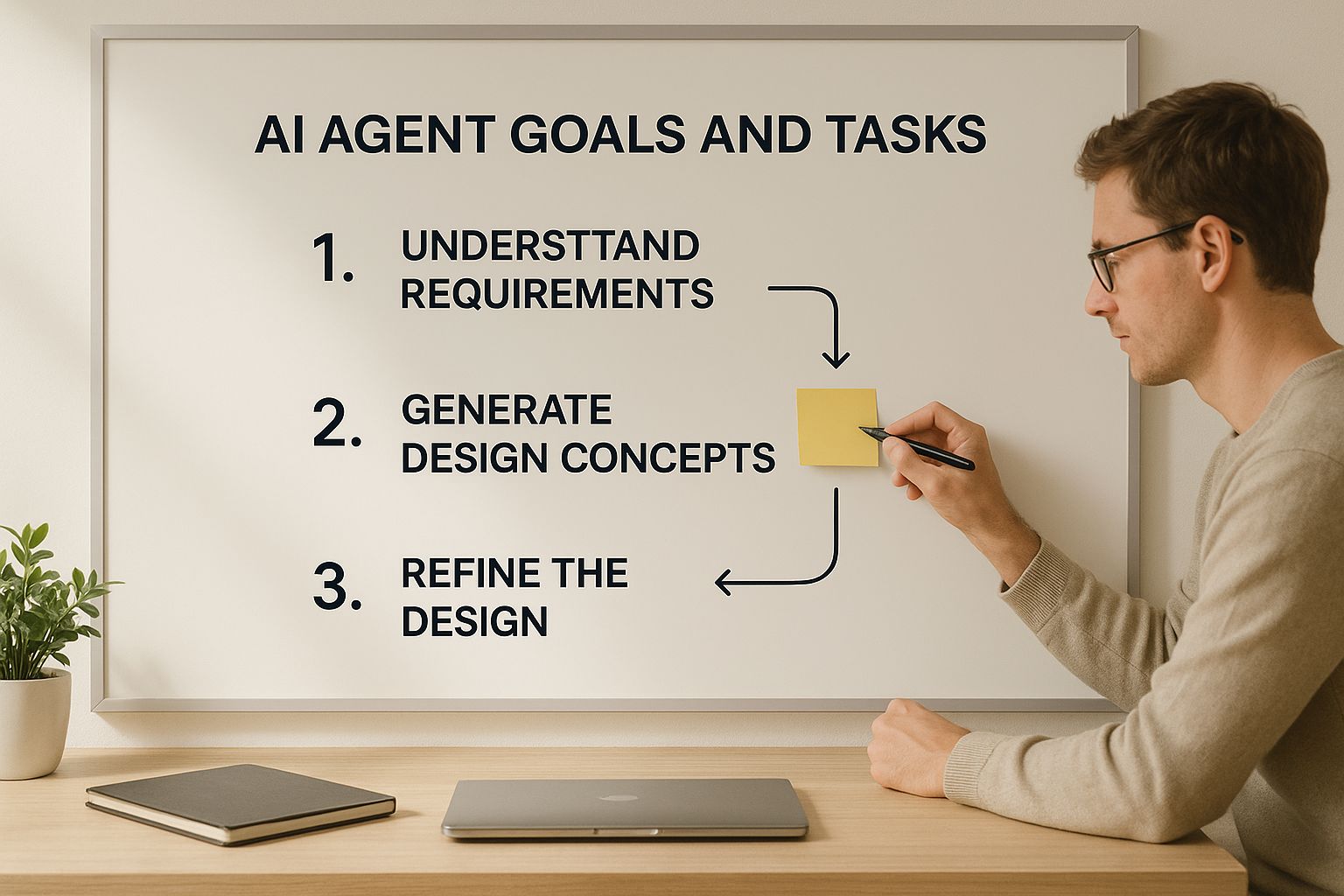

This whole process is a constant cycle of observing, thinking, and acting to hit a goal.

As the image shows, building an agent is less like traditional coding and more like a feedback loop. You define the goal, give it tools, and let it run. It’s this dynamic process that allows the agent to figure things out and adapt on the fly.

To really get a grip on this, we need to break down the essential pieces that make up any agent you’ll build. Each part plays a critical role, and understanding how they interact is the key to creating something that’s not just clever, but actually effective.

Key AI Agent Components and Their Functions

| Component | Function | Example Implementation |
|---|---|---|
| LLM (The Brain) | The core reasoning engine. It interprets goals, makes decisions, and plans the next steps. | Using OpenAI’s GPT-4 to understand the user’s request and decide which tool to use. |
| Tools (The Hands) | External functions and APIs that allow the agent to interact with the real world and perform actions. | A custom function for an AI girlfriend that can generate a new selfie image on request. |
| Memory (The Context) | Stores conversation history and the results of past actions to inform future decisions. | For an AI girlfriend, storing shared memories like “our first date” or “my favorite color.” |

Mastering how these three core components—the LLM, the tools, and the memory—work together is the foundation of building powerful and reliable AI agents. Let’s dig a little deeper into each one.

The Agent’s Brain: The Large Language Model

At the very heart of any AI agent sits a Large Language Model (LLM). This is the ‘brain’ of the operation. It’s the engine that processes information, makes judgments, and lays out a plan of attack. When you feed an agent a goal, the LLM is what deciphers your intent and figures out the most logical next move.

Let’s say you’ve built a personal finance agent and you ask it, “Find my biggest spending category this month.” The LLM doesn’t just read that as a simple text string. It grasps the actual goal: analyze expense data and pinpoint the maximum value. From there, it decides which tool it needs, like a database query or a data analysis function.

Your choice of LLM—whether it’s from OpenAI, Anthropic, or an open-source model—will have a massive impact on your agent’s capabilities. Some models are rock stars at logical reasoning, while others shine in creative writing. An AI companion app, for example, would probably benefit from a model fine-tuned for empathetic dialogue, which is a world away from a model built to generate code.

The Toolkit: Giving Your Agent Capabilities

A brain is pretty useless without hands. Tools are what give your agent those hands—they are the external functions and APIs that let it interact with the world outside its own code. This is what truly separates a simple chatbot from a functional agent.

You can give your agent access to almost anything:

- Web Search: Let it look up current events, stock prices, or product reviews.

- Database Access: Give it the power to query specific information, like a customer’s order history or your own spending log.

- Image Generation: For an AI girlfriend, a tool to create and send a selfie is a powerful way to enhance the user experience.

- Custom APIs: Hook it up to other software, like sending an email via an API or posting an update to a team chat.

Think back to our finance agent. Once the LLM decides it needs to analyze your spending, it would call its “database query” tool to pull the necessary transaction data. Without that tool, all its reasoning would be stuck in its head with no way to act.
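To make that concrete, here’s a rough sketch of what such a “database query” tool could look like. It uses an in-memory list instead of a real database, and the sample transactions, field names, and the function name `biggest_spending_category` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical sample data standing in for a real transactions table.
TRANSACTIONS = [
    {"category": "groceries", "amount": 220.50},
    {"category": "rent", "amount": 1200.00},
    {"category": "dining", "amount": 310.25},
    {"category": "groceries", "amount": 95.10},
]

def biggest_spending_category(transactions):
    """Return (category, total) for the category with the highest total spend."""
    totals = defaultdict(float)
    for tx in transactions:
        totals[tx["category"]] += tx["amount"]
    category = max(totals, key=totals.get)
    return category, round(totals[category], 2)

print(biggest_spending_category(TRANSACTIONS))
```

The agent never sees the raw rows here. It only gets the small, already-summarized result, which keeps the LLM’s job simple.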

The Memory: Providing Context for Coherent Action

Finally, for an agent to tackle any task that takes more than one step, it needs memory. This component is what holds the history of the conversation and the outcomes of previous actions. It’s the glue that provides context for every subsequent move.

Memory is what keeps the agent from being that frustrating conversational partner who forgets your name every two minutes. It enables a coherent, multi-step thought process.

Without memory, an agent can’t learn from what it just did. It’s the difference between a one-off calculator and an AI girlfriend who remembers your birthday, inside jokes, and past conversations, making the interaction feel deeply personal and authentic.
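A minimal sketch of short-term memory, assuming we simply keep the last few turns and paste them into the next prompt. The class name `ConversationMemory` and its methods are my own illustration, not any framework’s API.

```python
from collections import deque

class ConversationMemory:
    """Keeps the most recent turns so they can be prepended to the next prompt."""

    def __init__(self, max_turns=20):
        # A bounded deque drops the oldest turn automatically once it is full.
        self.turns = deque(maxlen=max_turns)

    def add(self, role, text):
        self.turns.append((role, text))

    def as_prompt_context(self):
        return "\n".join(f"{role}: {text}" for role, text in self.turns)

memory = ConversationMemory(max_turns=3)
memory.add("user", "My name is Sam.")
memory.add("agent", "Nice to meet you, Sam!")
memory.add("user", "What's my name?")
print(memory.as_prompt_context())
```

A fixed window like this is the simplest option. Long-term memories (birthdays, inside jokes) would need something more durable, such as a database or a summary that gets carried forward.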

This observation-thought-action cycle—fueled by the LLM, tools, and memory—is the fundamental architecture you’ll be working with. Once you get the hang of how these three parts play together, you can start building AI agents that are truly useful.

Choosing Your AI Agent Development Stack

Alright, this is one of the most important decisions you’ll make. Picking the right tools for your AI agent isn’t just about grabbing what’s popular; it’s about building a foundation that will directly affect your agent’s smarts, speed, and how much it costs you to run. The choices you make right here define what your agent can do and how you’ll actually put it together.

The whole process of creating an AI agent really boils down to two huge choices: the Large Language Model (LLM), which is the “brain,” and the agentic framework, which acts as its nervous system. These two are tightly linked, and getting it right from the start will save you a world of hurt later on.

Selecting the Right LLM Brain

The LLM is the reasoning engine at the core of your agent. You’ll quickly find that different models have their own personalities, strengths, and quirks. A model that’s a genius at writing Python code might be incredibly awkward at holding a simple, empathetic conversation. This is exactly why your specific goal—like building an AI companion—is so critical to keep in mind.

Here’s a quick rundown of the major players I’ve worked with:

- OpenAI’s GPT Series (GPT-4, GPT-3.5): These are the big names, often seen as the industry standard for general reasoning and tackling complex instructions. GPT-4, in particular, has serious analytical muscle, making it a go-to for agents that need to chew through multi-step problems.

- Anthropic’s Claude Family (Claude 3 Opus, Sonnet): I’ve found that Claude models often shine with their more natural, conversational style. They also have a heavy focus on safety, which is a big plus. For something like an AI girlfriend or a companion app, Claude’s talent for nuanced dialogue can create a much more believable and engaging experience.

- Open-Source Models (Llama 3, Mistral): If you want maximum control and are looking to scale without massive API bills, open-source is the way to go. Models like Llama 3 and Mistral demand more technical know-how to get up and running, but they let you customize everything. This is perfect if you’re building an agent with a highly specific persona or knowledge base.

Your LLM choice isn’t just a technical spec; it’s the personality of your agent. For a project like an AI girlfriend, a model’s ability to generate creative, empathetic, and flowing dialogue is way more important than its raw logical power.

Comparing Key LLM Trade-Offs

When you’re picking a model, you’re always playing a balancing act between three things: reasoning quality, speed, and cost. There’s no single “best” model, only the best one for your project.

| Factor | High-End Models (GPT-4, Claude 3 Opus) | Mid-Tier Models (GPT-3.5, Claude 3 Sonnet) | Open-Source Models (Llama 3) |
|---|---|---|---|
| Reasoning | Top-tier for complex, multi-step tasks and understanding nuance. | Very solid for most common tasks but can sometimes fumble with high complexity. | Performance is all over the map; can be amazing when fine-tuned for a specific job. |
| Speed | Usually slower because of their massive size. Slower responses can hurt the user experience in a chat app. | Much faster response times, which feels better for real-time chats. Critical for an authentic AI girlfriend experience. | Speed is on you; it completely depends on the hardware you run it on. |
| Cost | The most expensive per API call. This can add up fast with a busy agent. | A much more balanced and budget-friendly choice for high-volume apps like AI companions. | No API fees, but you’re paying for the hosting and upkeep yourself. |

For instance, if I were building a research agent to sift through dense legal documents, I’d lean on GPT-4’s power. But for an AI companion chatbot, a faster and cheaper model like Claude 3 Sonnet would deliver a much better user experience.

Choosing Your Agentic Framework

Once you have the brain, you need the plumbing to connect it to tools and give it a memory. That’s where agentic frameworks like LangChain and LlamaIndex come in. They handle all the boilerplate code that you really don’t want to write yourself.

LangChain: I think of LangChain as the “Swiss Army knife” for building agents. It’s got a huge library of integrations and pre-made components for everything from chaining LLM calls to managing tools and memory. It supports both Python and JavaScript, so it’s open to a lot of developers. LangChain is a fantastic pick if your agent needs to juggle many different tools or execute complex, multi-step plans.

LlamaIndex: While LangChain is the all-rounder, LlamaIndex is a specialist. Its main mission is to help you build agents that can reason over your own private data. If your number one goal is creating an agent that can answer questions about your documents, PDFs, or databases, LlamaIndex is often the faster, more optimized path. It’s a beast at Retrieval-Augmented Generation (RAG), which is the core tech for any good question-answering bot.
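Here’s a toy sketch of the retrieve-then-prompt idea behind RAG. It is not LlamaIndex’s API: the function names, the sample documents, and the word-overlap scoring are all my own stand-ins, and real systems typically rank documents by embedding similarity instead.

```python
def score(query, document):
    """Count how many distinct query words also appear in the document (toy relevance score)."""
    query_words = set(query.lower().split())
    doc_words = set(document.lower().split())
    return len(query_words & doc_words)

def retrieve(query, documents, k=1):
    """Return the k documents with the highest overlap score."""
    return sorted(documents, key=lambda d: score(query, d), reverse=True)[:k]

def build_prompt(query, documents):
    """Put the retrieved text in front of the question before it goes to the LLM."""
    context = "\n".join(retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Hypothetical private documents.
DOCS = [
    "Refunds are processed within 14 days of the return request.",
    "Our office is closed on public holidays.",
]
print(build_prompt("How long do refunds take?", DOCS))
```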

Your choice here really depends on your project’s main purpose. Building a complex, action-oriented agent that calls multiple APIs? LangChain is probably your best bet. Is your agent’s primary job to be an expert on a specific set of documents? LlamaIndex will get you there with less fuss.

Building Your First Agent with LangChain and Python

Alright, enough theory. Let’s roll up our sleeves and actually build something. In this section, we’re going to create a functional research assistant agent from scratch using Python and the incredibly useful LangChain framework. I’ll walk you through everything, from setting up the workspace to watching the agent think and act on its own.

This is where the magic really happens. Seeing an agent come to life demystifies the whole process and shows you the practical steps behind the high-level concepts. The principles you’ll learn here are the bedrock for building almost any kind of agent, from a simple research tool to more complex, interactive companions. For example, the same core logic of giving an agent tools and a goal applies if you want to explore how to create your own AI girlfriend, though the tools would be things like dialogue generators and memory systems instead of a web search.

Setting Up Your Workspace

First things first, let’s get your environment ready to go. You’ll need Python installed on your machine. We’ll be using a couple of key libraries that you can install with pip, Python’s package manager.

Just open your terminal or command prompt and run this command:

pip install langchain langchain-openai

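This simple command installs two crucial packages. LangChain is the framework that gives our agent its structure, and langchain-openai is the bridge that lets us connect to powerful models from OpenAI, like GPT-4 or GPT-3.5-turbo. You’ll also need an API key from a provider like OpenAI to give your agent access to the LLM. The web search tool used later in this section goes through SerpAPI, which needs its own API key and, in the LangChain versions I’ve used, the google-search-results package as well.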

Initializing the Core Components

With our workspace prepped, it’s time to define the agent’s core components: the LLM (its brain) and the tools (its hands).

The agent’s “brain” will be an LLM from OpenAI. When we write the code, we’ll initialize it and specify which model to use. For a straightforward research task like this, a model like gpt-3.5-turbo is a fantastic choice—it’s fast, capable, and won’t break the bank.

Next up, the tools. A research assistant is pretty useless if it can’t look things up. So, we need to equip it with access to up-to-date information. We’ll give it a web search function and throw in a calculator for any math problems it might run into. LangChain makes this super easy by providing pre-built tools we can just import and plug in.

Understanding the ReAct Framework

Before we wire everything together, it’s really important to get a handle on the logic our agent will use. We’re building what’s known as a ReAct agent, which stands for Reasoning and Acting. This framework is what allows the LLM to “think out loud,” breaking a complex problem down into a series of smaller thoughts and actions.

Here’s the basic flow:

- Observation: The agent gets a prompt from the user.

- Thought: The LLM thinks about what it needs to do next to answer the prompt. It decides if a tool would help.

- Action: The agent picks a tool (like web search) and uses it.

- Observation (again): It takes the output from the tool and feeds it back into its thought process.

- Repeat: This loop continues until the LLM decides it has enough information to give a final answer.

This back-and-forth is what makes the agent feel intelligent. It’s not just guessing; it’s actively showing its work.
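To show what “feeding the output back in” involves, here’s a small sketch of the parsing step that sits between the Thought and the Action. It assumes the LLM replies in a plain-text `Action:` / `Action Input:` / `Final Answer:` layout, which is a common ReAct-style prompt convention. The function name `parse_step` and the returned dictionary shape are my own.

```python
import re

def parse_step(llm_output):
    """Turn one block of ReAct-style text into either a tool call or a final answer."""
    final = re.search(r"Final Answer:\s*(.*)", llm_output, re.DOTALL)
    if final:
        return {"type": "final", "answer": final.group(1).strip()}
    action = re.search(r"Action:\s*(.*?)\nAction Input:\s*(.*)", llm_output, re.DOTALL)
    if action:
        return {
            "type": "action",
            "tool": action.group(1).strip(),
            "input": action.group(2).strip(),
        }
    # Neither pattern matched, so the caller has to decide how to recover.
    raise ValueError("Could not parse LLM output")

step = parse_step(
    "Thought: I should look this up.\n"
    "Action: web_search\n"
    "Action Input: last Super Bowl score"
)
print(step)
```

When the result is an action, the agent runs that tool and appends the output as a new observation. When it’s a final answer, the loop stops.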

The image below, taken from LangChain’s own documentation, gives you a great visual of how these different building blocks fit together.

As you can see, agents are just one piece of a much larger puzzle that includes models, prompts, and chains. They all work in concert to create these surprisingly powerful AI systems.

Assembling and Running the Agent

Now for the final step: bringing it all together. We do this using an Agent Executor, which is essentially the runtime that manages our agent. It takes the LLM, the tools, and your query, then orchestrates the entire ReAct loop from beginning to end.

Here’s a simplified Python script showing how this looks in practice:

    # Import necessary libraries
    # Note: these imports match older LangChain releases; newer versions
    # have moved or deprecated some of them.
    from langchain_openai import ChatOpenAI
    from langchain.agents import load_tools, initialize_agent, AgentType

    # 1. Initialize the LLM (the brain); expects OPENAI_API_KEY in the environment
    llm = ChatOpenAI(temperature=0, model_name="gpt-3.5-turbo")

    # 2. Load the tools (the hands); "serpapi" expects SERPAPI_API_KEY in the environment
    tools = load_tools(["serpapi", "llm-math"], llm=llm)

    # 3. Initialize the Agent Executor
    agent_executor = initialize_agent(
        tools,
        llm,
        agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
        verbose=True,  # Set to True to see the agent's thought process
    )

    # 4. Run the agent with a query
    query = "What was the score of the last Super Bowl and who won?"
    response = agent_executor.run(query)
    print(response)

A Quick Tip: When you’re just starting, setting verbose=True is a game-changer. It prints the agent’s entire internal monologue to your console. You can see exactly how it reasons, which tool it chooses, and what it finds. It’s the best way to debug and understand what’s happening under the hood.

When you run this code, you’ll literally see the agent think. It will first realize it needs to search the web for “last Super Bowl score,” then it will run the search, analyze the results, and craft a final answer.

The Power of Agents in Historical Context

What we just did in a few lines of code is pretty remarkable, and it stands on the shoulders of decades of AI research. Back in the 1990s and early 2000s, AI agents were mostly academic concepts. But thanks to massive leaps in computing power and data availability, they started becoming real.

Think about IBM’s Deep Blue beating world chess champion Garry Kasparov in 1997, or the Roomba becoming one of the first commercially successful home robots in 2002. By 2011, IBM’s Watson dominated Jeopardy! and Apple launched Siri, making virtual assistants a household name.

By following these steps, you haven’t just built a cool little AI agent—you’ve gained a hands-on understanding of the entire development workflow. You’ve seen how to merge an LLM’s powerful reasoning with practical, real-world tools to create something that can solve problems on its own. This knowledge is your key to building much more specialized and advanced agents in the future.

Making Your AI Agent Reliable and Efficient

Getting a prototype agent up and running is a huge milestone. It’s exciting! But now comes the real work: turning that cool experiment into a professional-grade agent people can actually count on. This is what separates a fun weekend project from a genuinely useful tool.

It takes more than just clean code. You need to be thoughtful about how the agent thinks, how it bounces back from errors, and how it manages its own resources. An agent that constantly fails, gets stuck in loops, or quietly racks up a massive API bill isn’t just ineffective—it’s a liability. Let’s dig into the practices that will make your creations robust and ready for the real world.

Sharpening Your Agent’s Reasoning with Prompt Engineering

The quality of your agent’s “thinking” comes down to the quality of your instructions. This is where prompt engineering is absolutely critical. It’s the art and science of writing instructions that guide the LLM’s reasoning process, making sure its outputs are consistent, accurate, and on-brand with your goals.

Instead of giving a vague, one-line command, a well-engineered prompt is much more specific. It might include:

- A Clear Role or Persona: “You are an expert research assistant specializing in financial data.” For an AI girlfriend, this would be: “You are a warm, witty, and empathetic partner. Your name is Chloe.”

- Specific Constraints: “Your final answer must be a single, concise paragraph. Do not include any personal opinions or speculation.” For an AI girlfriend: “Never break character. Always respond from Chloe’s perspective.”

- Examples of Good Output: Show the agent exactly what a successful outcome looks like.

A great prompt acts like guardrails for your agent’s thought process. It doesn’t just tell the agent what to do; it shows it how to think. This dramatically cuts down on the chances of it going off the rails or giving you bizarre, unpredictable results.

Think of it like onboarding a new employee. A vague request like “look into this” will get you a vague report. But clear, detailed instructions with examples will get you high-quality, actionable work. This discipline is fundamental.
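Here’s one way to turn those three ingredients into code. It’s a minimal sketch: the helper name `build_system_prompt` is hypothetical, and the sample “good output” line is made up purely for illustration.

```python
def build_system_prompt(role, constraints, examples):
    """Assemble a system prompt from a persona, a list of rules, and sample outputs."""
    lines = [role, "", "Constraints:"]
    lines += [f"- {c}" for c in constraints]
    lines += ["", "Examples of good output:"]
    lines += [f"- {e}" for e in examples]
    return "\n".join(lines)

prompt = build_system_prompt(
    role="You are an expert research assistant specializing in financial data.",
    constraints=[
        "Your final answer must be a single, concise paragraph.",
        "Do not include any personal opinions or speculation.",
    ],
    # Invented example output, only here to show the shape.
    examples=["Q3 revenue rose 4% year over year, driven mainly by subscriptions."],
)
print(prompt)
```

Keeping the role, constraints, and examples as separate arguments makes it easy to tweak one piece at a time and see how the agent’s behavior changes.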

Graceful Error Handling for a Resilient Agent

In the real world, things break. APIs time out, web pages change their layout, and sometimes, LLMs just spit out nonsense. An agent that crashes at the first sign of trouble is brittle and completely unreliable. Building in solid error handling is non-negotiable for any serious project.

Your agent needs a game plan for when one of its tools fails. Instead of just giving up, it should be able to:

- Retry the Action: Often, the problem is just a temporary network glitch. A simple retry mechanism can fix a surprising number of issues.

- Try a Different Tool: If one search API is down, can the agent pivot to a backup?

- Ask for Clarification: If the user’s initial request was ambiguous, the agent should ask for more information instead of just making a bad guess.

This kind of resilience makes your agent feel far more intelligent and trustworthy. It can navigate the messy, unpredictable nature of real-world data without falling apart.
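The first two strategies can be sketched in a few lines of plain Python. The helper names and the two search functions below are hypothetical stand-ins. In a real agent, the tools would wrap actual API calls and you’d probably catch narrower exception types.

```python
import time

def call_with_retry(tool, query, retries=3, delay=0.1):
    """Call tool(query), retrying on any exception for up to `retries` attempts."""
    for attempt in range(1, retries + 1):
        try:
            return tool(query)
        except Exception:
            if attempt == retries:
                raise  # out of attempts, let the caller handle it
            time.sleep(delay * attempt)  # wait a little longer after each failure

def call_with_fallback(tools, query):
    """Try each tool in order and return the first successful result."""
    last_error = None
    for tool in tools:
        try:
            return call_with_retry(tool, query)
        except Exception as err:
            last_error = err
    raise RuntimeError("All tools failed") from last_error

# Hypothetical tools: the primary one is always down, the backup works.
def primary_search(query):
    raise TimeoutError("primary search API timed out")

def backup_search(query):
    return f"backup results for: {query}"

print(call_with_fallback([primary_search, backup_search], "AI agents"))
```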

Keeping Costs Under Control

AI agents, especially ones using powerful models like GPT-4, can get expensive in a hurry. Every single call to the LLM has a price tag, and an inefficient agent can burn through your budget before you even realize it. You have to be smart about cost management from day one.

Here are a few key strategies to implement immediately:

- Model Selection: Does your task really need the most powerful, expensive model available? For an AI girlfriend, a faster, cheaper model often provides a better, more responsive chat experience.

- Caching: If your agent gets the same questions repeatedly, set up a caching layer. This stores the answers to common queries so you don’t have to pay the LLM to generate the same response over and over again.

- Efficient Tool Design: Design your tools to be lean. A tool that returns a massive, unfiltered chunk of data forces the LLM to process more tokens (and sometimes make extra calls) just to find the piece of information it needs.

The Critical Role of Human Oversight

At the end of the day, even the most sophisticated agent can make mistakes. For any critical task, building a human-in-the-loop (HITL) validation system is essential for both safety and performance. This simply means creating checkpoints in the agent’s workflow where a human can review, approve, or correct its proposed actions before they’re executed.

This is especially important in the early days of an agent’s deployment. The feedback you gather from these human reviewers is invaluable for improving the agent over time. You can learn more about how this feedback directly improves model behavior in our guide on Reinforcement Learning from Human Feedback, a technique that uses human input to fine-tune AI systems.

As your agent gets more and more reliable, you can start to dial back the level of human oversight required.
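A checkpoint like this can be very small. In the sketch below, `approve` is any callable that returns True or False. Here it’s a hard-coded policy so the example runs on its own, but in practice it might prompt a person or push the action into a review queue. All names are hypothetical.

```python
def run_with_approval(proposed_action, execute, approve):
    """Execute the action only if the reviewer callback approves it."""
    if approve(proposed_action):
        return execute(proposed_action)
    return f"REJECTED: {proposed_action}"

# Hypothetical reviewer policy: block anything that sends email.
def reviewer(action):
    return not action.startswith("send_email")

def execute(action):
    return f"EXECUTED: {action}"

print(run_with_approval("search_web('AI agents')", execute, reviewer))
print(run_with_approval("send_email('boss@example.com')", execute, reviewer))
```

Dialing back oversight then becomes a matter of loosening the `approve` policy rather than rewriting the agent.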

Common Questions About Building AI Agents

As you start your journey into building AI agents, you’re bound to hit some snags and have a lot of questions. That’s just part of the process. I’ve been there, and I’ve seen countless developers run into the same roadblocks. Let’s walk through some of the most common questions and give you the straightforward answers you need to keep moving forward.

One of the first major hurdles is getting your agent to do more than just simple, one-off tasks. It’s easy to get a basic agent running, but what about complex, multi-step workflows? This is where many people get stuck.

The key is to look beyond a simple ReAct agent architecture. For more intricate jobs, you’ll want to explore planning agents. LangChain, for example, has offered a Plan-and-Execute implementation in its experimental package, which is a good fit for this. The agent first creates a detailed, step-by-step plan and then tackles each part one by one. This tends to be a more reliable way to handle long-horizon tasks.
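The plan-then-execute pattern itself is simple enough to sketch without any framework. This is my own illustration of the general idea, not LangChain’s implementation. The planner and executor below are stubs standing in for LLM calls.

```python
def plan_and_execute(goal, planner, executor):
    """Ask the planner for a list of steps, then run each step in order."""
    steps = planner(goal)
    results = []
    for step in steps:
        # Each step sees the results so far, so later steps can build on earlier ones.
        results.append(executor(step, results))
    return results

# Stubs standing in for LLM calls.
def fake_planner(goal):
    return [f"search for '{goal}'", "summarize findings"]

def fake_executor(step, previous_results):
    return f"done: {step} (with {len(previous_results)} prior results)"

for line in plan_and_execute("AI companion apps", fake_planner, fake_executor):
    print(line)
```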

Understanding Agent Limitations

Let’s be real: the biggest challenge you’ll face with today’s AI agents is their brittleness and lack of reliability. It’s incredibly frustrating, but you will absolutely see your agent get stuck in a loop, misuse a tool, or “hallucinate” a plan that makes zero sense. It happens to all of us.

This is exactly why building in robust error handling is non-negotiable. You need to get good at prompt engineering and, for anything important, seriously consider having a human-in-the-loop. For an AI girlfriend, this could mean flagging conversations that go off-topic or become incoherent, allowing you to fine-tune the persona.

Can You Build an Agent Without Frameworks?

Yes, you absolutely can build an AI agent from scratch without using a framework like LangChain or LlamaIndex. It involves talking directly to an LLM’s API, managing the prompt loops yourself, parsing the model’s output to figure out which tool to call, and then executing those tool calls.
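To give a feel for what that involves, here’s a bare-bones loop with a scripted stand-in for the LLM. It assumes the model returns a small dictionary naming either a tool call or a final answer. A real LLM returns text or structured tool calls that you’d have to parse first, and every name here is hypothetical.

```python
def run_agent(goal, llm, tools, max_steps=5):
    """Minimal agent loop: ask the LLM, run the tool it picks, feed the result back."""
    history = [f"Goal: {goal}"]
    for _ in range(max_steps):
        # Expected reply: {"tool": ..., "input": ...} or {"final": ...}
        decision = llm("\n".join(history))
        if "final" in decision:
            return decision["final"]
        observation = tools[decision["tool"]](decision["input"])
        history.append(f"Observation: {observation}")
    return "Stopped: step limit reached"

# Scripted stand-in for a real LLM: use the calculator once, then answer.
def fake_llm(prompt):
    if "Observation:" not in prompt:
        return {"tool": "calculator", "input": "6 * 7"}
    return {"final": "The answer is " + prompt.rsplit("Observation: ", 1)[1]}

def calculator(expression):
    """Toy tool that only handles 'a * b'."""
    left, right = expression.split("*")
    return int(left) * int(right)

print(run_agent("What is 6 times 7?", fake_llm, {"calculator": calculator}))
```

The `max_steps` cap is there because a confused agent will otherwise happily loop forever.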

But frameworks exist for a very good reason. They take care of all that repetitive, boilerplate “scaffolding” for you.

Using a framework saves a massive amount of development time. It frees you up to focus on what makes your agent special—its unique logic and purpose—instead of reinventing the foundational plumbing that everything runs on.

This holds true no matter what kind of interactive agent you’re building. Interestingly, even in more specialized areas like AI companionship, the same questions about capabilities and limitations pop up. If you’re building any kind of persona-driven agent, you might find some useful parallels in our article covering the top 25 questions about AI girlfriends.

How to Control AI Agent Costs

Cost is a huge concern for anyone working with LLM APIs. Those calls can get expensive, fast. The best defense is a multi-layered strategy to keep your budget in check.

Here are the tactics I always use:

- Use the Right-Sized Model: Don’t default to the biggest, most expensive model. For an AI girlfriend app with thousands of users, a fast and cost-effective model is essential for a sustainable business.

- Implement Caching: This is a must. If you get the same query twice, you shouldn’t have to pay for the same answer twice. Cache previous results and reuse them.

- Optimize Your Tools: Design your tools and the prompts that trigger them to be as efficient as possible. The goal is to minimize the number of LLM calls needed to complete any given task.

- Monitor Usage: Set up monitoring and alerts for your API key. This is your early warning system to catch any surprise spending spikes before they become a real problem.
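Most providers offer usage dashboards and alerts, but it can also help to track spend inside your own code. The sketch below is one simple way to do that. The class name is my own, and the price and budget are placeholder numbers, not real provider pricing.

```python
class UsageMonitor:
    """Accumulates token usage and flags when estimated spend reaches a budget."""

    def __init__(self, budget_usd, price_per_1k_tokens):
        self.budget_usd = budget_usd
        self.price_per_1k_tokens = price_per_1k_tokens
        self.tokens_used = 0

    def record(self, tokens):
        self.tokens_used += tokens

    @property
    def spend_usd(self):
        return self.tokens_used / 1000 * self.price_per_1k_tokens

    def over_budget(self):
        return self.spend_usd >= self.budget_usd

# Placeholder numbers, not real provider pricing.
monitor = UsageMonitor(budget_usd=1.00, price_per_1k_tokens=0.002)
monitor.record(400_000)
print(monitor.over_budget())
monitor.record(200_000)
print(monitor.over_budget())
```

You would call `record` after every LLM response with the token count the API reports, and stop or alert as soon as `over_budget()` turns true.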
